1 School of Information Technology, Deakin University; 2 School of Computer Science and Technology, China University of Mining and Technology; 3 School of Computer Science and Engineering, Nanyang Technological University; 4 Defense Science and Technology Group, Australia


ABSTRACT: The quality of point clouds is often limited by noise introduced during their capture process. Consequently, a fundamental 3D vision task is the removal of noise, known as point cloud filtering or denoising. State-of-the-art learning-based methods focus on training neural networks to infer filtered displacements and directly shift noisy points onto the underlying clean surfaces. In high noise conditions, they iterate the filtering process. However, this iterative filtering is only done at test time and is less effective at ensuring points converge quickly onto the clean surfaces. We propose IterativePFN (iterative point cloud filtering network), which consists of multiple IterationModules that model the true iterative filtering process internally, within a single network. We train our IterativePFN network using a novel loss function that utilizes an adaptive ground truth target at each iteration to capture the relationship between intermediate filtering results during training. This ensures that the filtered results converge faster to the clean surfaces. Our method achieves better performance than state-of-the-art methods. The source code can be found at https://github.com/ddsediri/IterativePFN.

Method

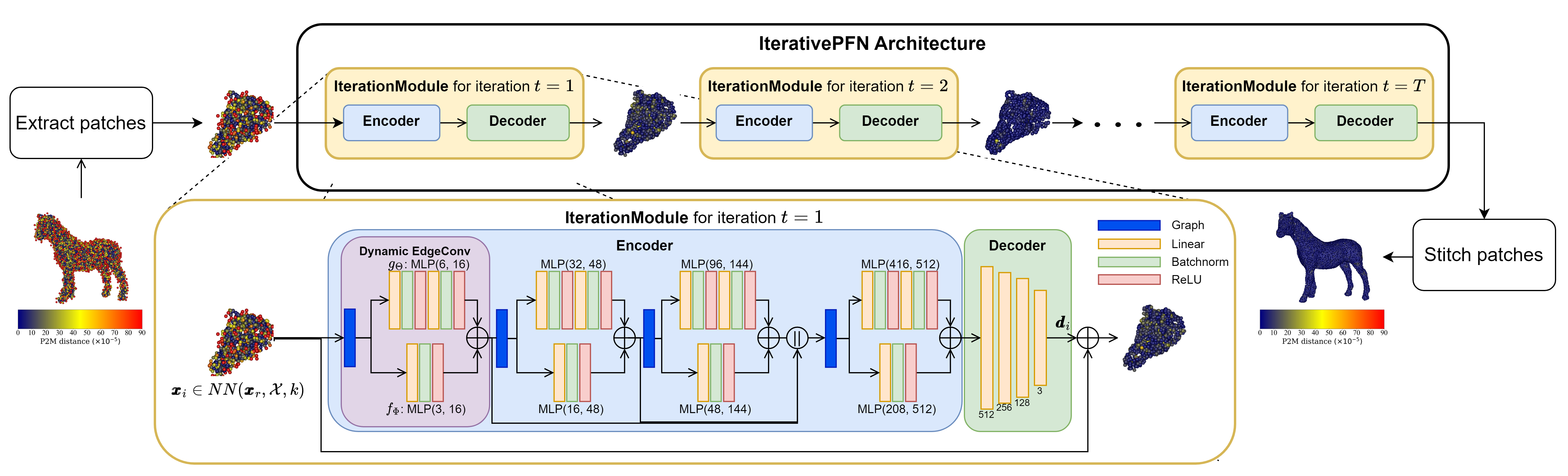

We present IterativePFN, a novel architecture geared towards filtering point clouds. We design IterationModules that consume directed graphs of point cloud patches and produce rich node (point) features, which are then used to generate filtered displacements. These displacements move noisy points back towards the underlying clean surface. Unlike current methods that apply iterative filtering only at test time, our method extends iterative filtering to both training and test time, so that the network learns the relationships between consecutive, intermediate displacements.
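The displacement-based update described above can be illustrated with a minimal NumPy sketch. It shows only the generic rule of repeatedly adding a predicted displacement to each point, which is how test-time iterative filtering works; it is not the IterativePFN network itself, where the IterationModules are chained inside a single network and trained jointly. The names `filter_iteratively` and `toy_displacement` are hypothetical, and `toy_displacement` is a hand-written stand-in for a trained network that assumes the clean surface is the plane z = 0.

```python
import numpy as np


def filter_iteratively(points, predict_displacement, num_iters):
    """Apply x <- x + d(x) num_iters times and keep every intermediate result."""
    history = [points]
    for _ in range(num_iters):
        # Each pass shifts the current points by the predicted displacement.
        points = points + predict_displacement(points)
        history.append(points)
    return history


def toy_displacement(points, step=0.5):
    """Hypothetical stand-in for a learned predictor.

    Assumes the clean surface is the plane z = 0 and moves each point
    a fraction `step` of the way towards it.
    """
    displacement = np.zeros_like(points)
    displacement[:, 2] = -step * points[:, 2]
    return displacement


# Usage: a flat patch with noise added along z, filtered four times.
rng = np.random.default_rng(0)
noisy_patch = rng.uniform(-1.0, 1.0, size=(100, 3))
noisy_patch[:, 2] = 0.05 * rng.standard_normal(100)
results = filter_iteratively(noisy_patch, toy_displacement, num_iters=4)
```

Each entry of `results` is one intermediate filtering result. With this toy predictor the distance to the plane halves at every step, which makes the role of consecutive intermediate displacements easy to inspect.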

IterativePFN pipeline

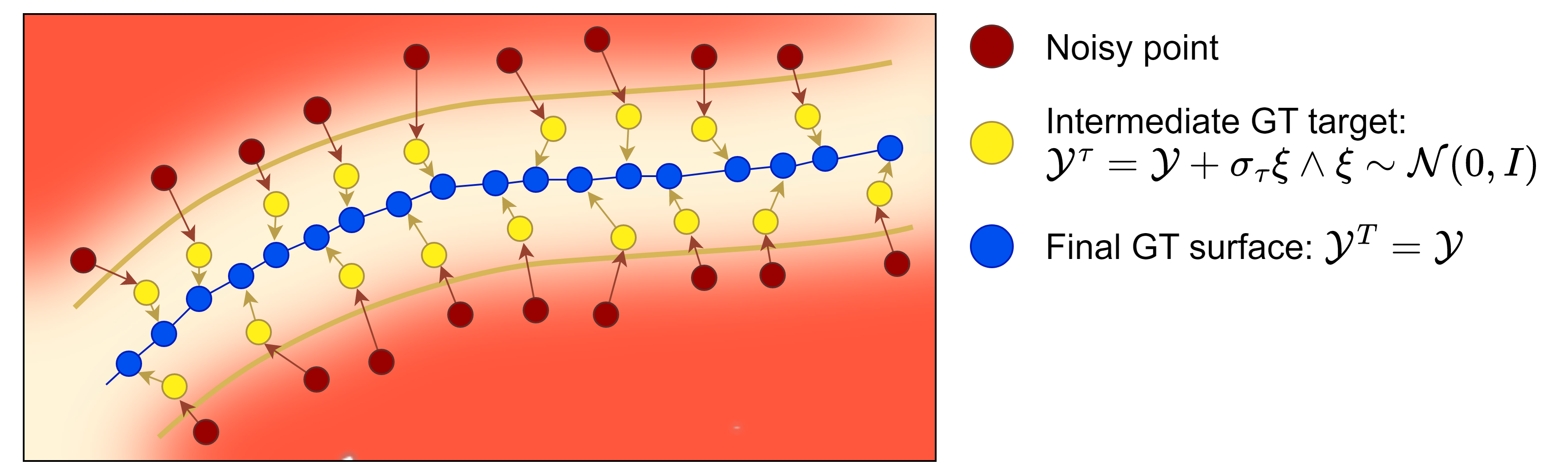

To train our network, we employ a novel adaptive ground truth loss function.

Adaptive ground truth loss function

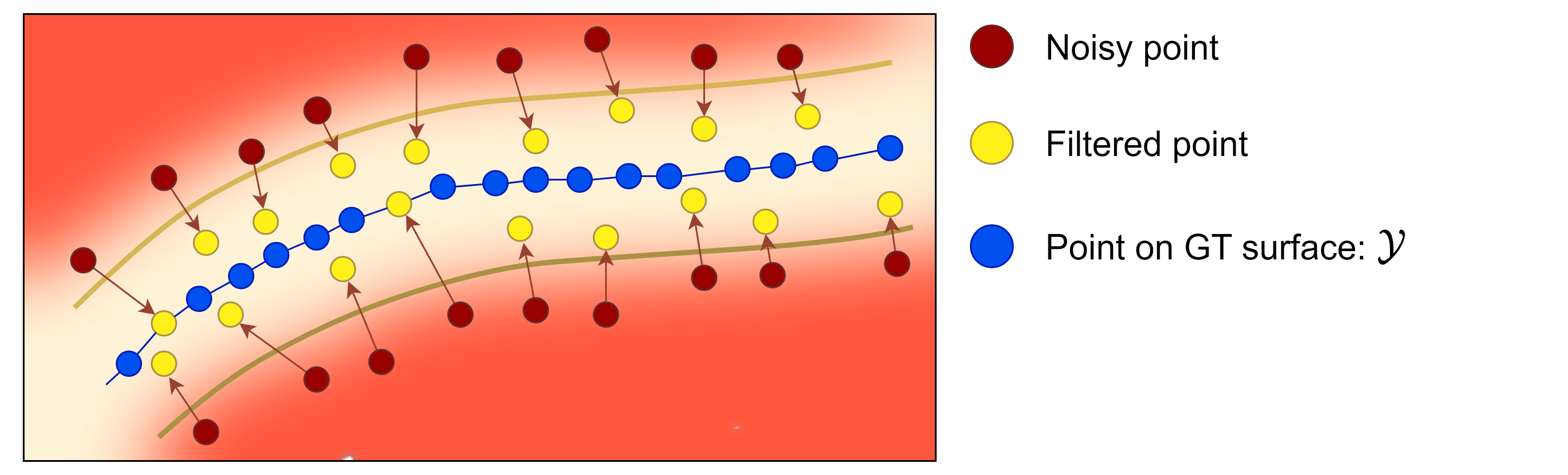
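As a rough illustration of the loss defined in this section, the sketch below computes Gaussian weights from distances to the patch reference point, compares each predicted displacement with the displacement to the nearest neighbour in that iteration's target, and sums the weighted result over all IterationModules. The function names are hypothetical, the nearest-neighbour search is brute force, and the way the per-iteration targets are built is left to the caller because this section does not specify it.

```python
import numpy as np


def gaussian_weights(x, x_ref, r_s):
    """Weights proportional to exp(-||x_i - x_ref||^2 / r_s^2), normalised to sum to 1."""
    sq_dist = np.sum((x - x_ref) ** 2, axis=1)
    w = np.exp(-sq_dist / r_s ** 2)
    return w / w.sum()


def nearest_neighbours(x, target):
    """For each row of x, return the closest row of target (brute-force search)."""
    sq_dist = np.sum((x[:, None, :] - target[None, :, :]) ** 2, axis=2)
    return target[np.argmin(sq_dist, axis=1)]


def adaptive_gt_loss(inputs, displacements, targets, weights):
    """Sum over iterations of the weighted per-point squared error.

    inputs[k]        : points fed to the k-th IterationModule, shape (N, 3)
    displacements[k] : displacements predicted by that module, shape (N, 3)
    targets[k]       : target point set for that iteration, shape (M, 3)
                       (how targets are generated is an assumption left to the caller)
    weights          : per-point weights, shape (N,)
    """
    total = 0.0
    for x_prev, d, y in zip(inputs, displacements, targets):
        # Displacement that would move each point onto its nearest target point.
        ideal = nearest_neighbours(x_prev, y) - x_prev
        per_point = np.sum((d - ideal) ** 2, axis=1)
        total += float(np.sum(weights * per_point))
    return total
```

Because the contributions of all iterations are added into one scalar, a single backward pass through such a loss would update every module at once, which is what joint training requires.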

The adaptive ground truth loss that supervises training, per point and per IterationModule, can be expressed as:

\[\newcommand{\norm}[1]{\left\|#1\right\|} \begin{align} L^{(\tau)}_i(\mathcal{Y}^{(\tau)}) =~&\norm{\pmb{d}^{(\tau)}_i - \big[NN(\pmb{x}^{(\tau-1)}_i, \mathcal{Y}^{(\tau)})-\pmb{x}^{(\tau-1)}_i\big]}^2_2, \notag \end{align}\]

Gaussian weights based on position from patch center:

\[\begin{align} w_i = \frac{\exp\left(-\norm{\pmb{x}_i-\pmb{x}_r}^2_2/r^2_s\right)}{\sum_i \exp\left(-\norm{\pmb{x}_i-\pmb{x}_r}^2_2/r^2_s\right)}, \notag \end{align}\]

Single IterationModule loss \(\leftrightarrow\) weighted average across points:

\[\begin{align} L^{(\tau)} = \sum_i w_i L^{(\tau)}_i, \notag \end{align}\]

Sum loss contributions across all IterationModules (ItMs) \(\rightarrow\) allows joint training:

\[\begin{align} \mathcal{L}_a = \sum^{T}_{\tau=1} L^{(\tau)}. \notag \end{align}\]

Results